Project Description

The Archaiv project uses AI to catalogue images and documents so that archives are easier to access. It is supported by a rigorous quality assurance process to manage bias and ethical risks.

Problem definition:

Digital archives have traditionally required tagging so users can search accurately. How can we develop an easier way for archivists and the community to summarise their documents, and make it easier for their users to access what they want?

We are going to use LLMs to allow users to search for relevant documents using natural language. This AI will be known as Archaiv.

In the future, we will use human validation to increase the accuracy of the model. Using community reinforcement learning will allow marginalised groups to address bias in the model.

Stakeholders:

- Historians, Librarians and Researchers

- Dementia Patients

- First Nations people

- The community

- ACT Memory users

- Users of the Public Record Office Victoria photographic collections

AI Safety (Governance) Framework:

Managing the biases in an AI system and ensuring it is used ethically is very important. A Governance Board will be established to provide oversight and ensure that Archaiv’s biases are understood and managed. This includes identifying the biases and accounting for them by manually tagging items that fall outside the average of the collection, and reviewing any identifications that have been flagged as incorrect. Archaiv will be regularly reviewed and improved through this process to increase the quality of the search results and reduce the risks, such as discrimination, of using AI for this purpose. The members of this board will be diverse in age, race and gender, and will have experience applicable to the archives, data sets or research projects that are using Archaiv. The board will include Aboriginal and Torres Strait Islander representatives and will have strong expertise in data management, ethics and AI development.

According to the US Congress, "the term 'artificial intelligence' means a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments" (William M. (Mac) Thornberry National Defense Authorization Act, 2020, p. 1164). By labelling media, Archaiv makes decisions in a virtual environment that are subject to community review. Archaiv therefore removes the need for a human cataloguer to go through the trove of information.

Adoption rates of AI across Australia remain relatively low. One factor influencing adoption is Australians’ low level of trust and confidence in AI technologies and systems. To enable public trust, the team has illustrated below how Archaiv was assessed against the ethics triangle and the EU’s AI risk assessment framework, as well as how Archaiv conforms with national and state-based AI principles.

Advanced technologies utilising AI, ML and autonomy lead to significant ethical and moral challenges, which are discussed in the Department of Industry, Science and Resources discussion paper Supporting responsible AI. These challenges can be examined using the three alternative approaches in the ethics triangle: rules or principles, outcomes or consequences, and virtues or beliefs.

- Archaiv passes the test when the principles-based lens is applied, as illustrated in detail below in the principles tables from the Australian AI ethics framework and the NSW AI ethics framework.

- The outcomes lens examines the efficacy of an action or inaction, and in this case Archaiv’s benefits have been assessed as outweighing potential harms. The team has also taken additional steps to minimise harm by minimising bias in the model.

- As Archaiv is transparent about its use of AI and is not aiming to deceive, it passes the ethics test from the virtues lens as well.

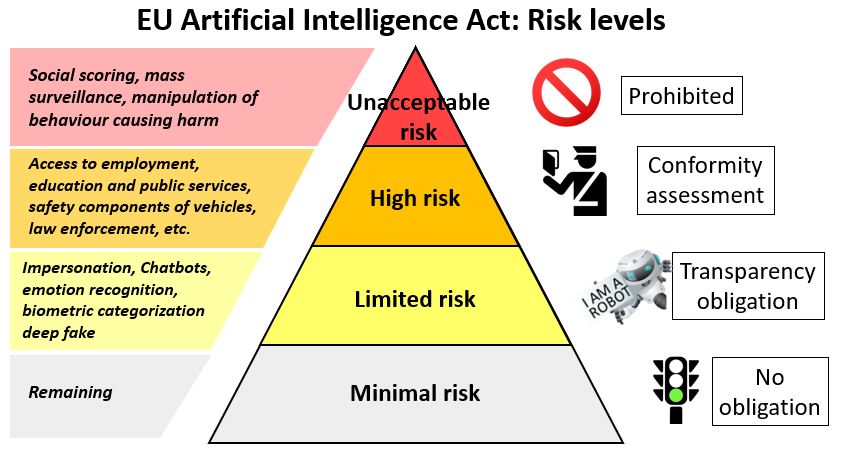

The European Union’s Artificial Intelligence Act identifies four levels of risk. The highest level covers prohibited uses, and the lowest level covers minimal-risk uses that are not subject to any obligations, though the adoption of voluntary codes of conduct is recommended. Archaiv sits in the third level, ‘Limited Risk’, because that level includes chatbots and biometric recognition.

Archaiv fulfils its transparency obligations by making it clear to website users that AI was used in the labelling (hence the need for crowdsourced verification). The project does not conduct social scoring, but the community is free to make judgements on the labels through the ‘thumbs up’/‘thumbs down’ feature, and those judgements are then subject to the Governance Board’s review.
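As an illustration only, the thumbs up/thumbs down feedback described above could feed a review queue for the Governance Board along the following lines. This is a minimal sketch and not Archaiv’s actual implementation: the `LabelFeedback` class, the `review_queue` function, and the `min_votes` and `max_down_share` thresholds are all hypothetical names and values chosen for the example.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LabelFeedback:
    """Community votes on one AI-generated label (hypothetical structure)."""
    item_id: str
    label: str
    votes: Counter = field(default_factory=Counter)

    def vote(self, thumbs_up: bool) -> None:
        # Record a single thumbs up or thumbs down from a community member.
        self.votes["up" if thumbs_up else "down"] += 1

    def needs_review(self, min_votes: int = 3, max_down_share: float = 0.5) -> bool:
        # Assumed rule: flag a label once enough people have voted and
        # more than half of those votes are thumbs down.
        total = self.votes["up"] + self.votes["down"]
        if total < min_votes:
            return False
        return self.votes["down"] / total > max_down_share


def review_queue(feedback: list[LabelFeedback]) -> list[LabelFeedback]:
    """Return the labels that would be passed to the Governance Board."""
    return [f for f in feedback if f.needs_review()]
```

Under these assumed thresholds, a label with one thumbs up and three thumbs down would be queued for review, while a label with only two votes would wait until more feedback arrives. The thresholds themselves would be a decision for the Governance Board.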

Principles at a glance

Australia’s 8 Artificial Intelligence (AI) Ethics Principles are designed to ensure AI is safe, secure and reliable. Here is how we have ensured Archaiv applies these principles in its model:

Principle 1) Human, societal and environmental wellbeing: AI systems should benefit individuals, society and the environment. Archaiv provides positive health, wellbeing and societal outcomes.

Principle 2) Human-centred values: AI systems should respect human rights, diversity, and the autonomy of individuals. Archaiv accounts for diversity by understanding the biases in the AI model and adjusting it as required.

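Principle 3) Fairness: AI systems should be inclusive and accessible, and should not involve or result in unfair discrimination against individuals, communities or groups. Archaiv accounts for accessibility, including by providing screen reader descriptions of photos. Archaiv endeavours to protect against unfair discrimination through a strong quality control process.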

Principle 4) Privacy protection and security: AI systems should respect and uphold privacy rights and data protection, and ensure the security of data. Archaiv uses data that is publicly available from official government archives.

Principle 5) Reliability and safety: AI systems should reliably operate in accordance with their intended purpose. Archaiv has been developed for a specific purpose and the inherent risks of using AI for this purpose are understood and managed.

Principle 6) Transparency and explainability: There should be transparency and responsible disclosure so people can understand when they are being significantly impacted by AI, and can find out when an AI system is engaging with them. The inherent biases in Archaiv will be studied, managed and documented. All users will be asked to acknowledge the biases and risks of using AI, via a checkbox, when they log in to use Archaiv.

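Principle 7) Contestability: When an AI system significantly impacts a person, community, group or environment, there should be a timely process to allow people to challenge the use or outcomes of the AI system. Archaiv’s review mechanisms allow the community to question and challenge its labels, with flagged items reviewed by the Governance Board.

Principle 8) Accountability: People responsible for the different phases of the AI system lifecycle should be identifiable and accountable for the outcomes of the AI systems, and human oversight of AI systems should be enabled. The Governance Board will be responsible for the use of Archaiv. The Board members’ names and contact details will be made publicly available.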

Archaiv also abides by the Australian Society of Archivists’ values:

- an open, inclusive and diverse archival profession

- efficient management of records as evidence, memory and history of our democracy, business, and cultures

- social and organisational accountability through preservation, availability and use of records and archives

- research into archival and recordkeeping theory and practice, including digital recordkeeping, to stay ahead in a digital and disruptive society

- standards of recordkeeping and archival education, practice and conduct.

NSW Ethics Framework:

Team Picture Perfect also assessed Archaiv against the NSW AI ethics framework.

Community benefit

Archaiv demonstrates that a clear community and government benefit or insight will be delivered. Other solutions were considered and ruled out because they would not realise the benefits of an AI solution.

Fairness

Archaiv incorporates safeguards to manage data bias and data quality risks. The model is designed with a focus on diversity and inclusion. Regular monitoring of outputs has been factored in: the community can review Archaiv’s outputs and submit them to the Governance Board for assessment.

Privacy and security

Archaiv uses NSW and ACT Government open data. The Government has accounted for privacy concerns either by providing details of how information will be used and shared in the Privacy Collection Notice provided at the time of collection, or by aligning with the Privacy and Personal Information Protection Act 1998, as appropriate.

Transparency

Archaiv’s review mechanisms will ensure citizens can question and challenge AI-based outcomes.

Accountability

Archaiv makes ‘decisions’ or assessments about media in a virtual environment. A review and assurance process, as explained above, has been put in place for both the development of Archaiv and its outcomes.